This is the code accompanying the paper "Provable robustness against all adversarial lp-perturbations for p ≥ 1" (ICLR 2020).

Francesco Croce, Matthias Hein

University of Tübingen

https://arxiv.org/abs/1905.11213

We introduce a regularization scheme that aims at expanding the linear regions of ReLU networks in both the L1- and the Linf-sense. We show that in this way we can simultaneously achieve provable robustness with respect to all Lp-norms for p ≥ 1.

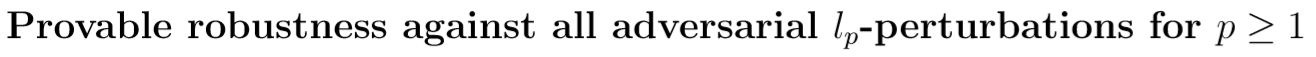

We compute the largest Lp-balls contained, first, in the union of an L1- and an Linf-ball and, second, in the convex hull of that union, and observe that the latter are significantly larger than the former.
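
As a rough illustration of this observation (a sketch, not code from this repository), the radius for the convex hull can be derived from support functions: an Lp-ball of radius r centered at the origin lies in the convex hull if and only if r·||w||_q ≤ max(eps1·||w||_inf, epsinf·||w||_1) for every direction w, where q is the dual exponent of p. Assuming epsinf ≤ eps1 ≤ d·epsinf, the worst direction has floor(eps1/epsinf) entries equal to 1 and one fractional entry, which gives the closed form in hull_radius. All function names are hypothetical, and the random check only provides upper bounds.

```python
import math
import random
from typing import Sequence


def dual_exponent(p: float) -> float:
    """q with 1/p + 1/q = 1, for 1 < p < inf."""
    return p / (p - 1.0)


def support_ratio(w: Sequence[float], eps1: float, epsinf: float, p: float) -> float:
    """Upper bound on the hull radius obtained from one direction w (requires 1 < p < inf).

    max(eps1 * ||w||_inf, epsinf * ||w||_1) is the support function of the convex hull,
    and r * ||w||_q is the support function of the Lp-ball of radius r.
    """
    q = dual_exponent(p)
    h_hull = max(eps1 * max(abs(t) for t in w), epsinf * sum(abs(t) for t in w))
    return h_hull / sum(abs(t) ** q for t in w) ** (1.0 / q)


def hull_radius(eps1: float, epsinf: float, p: float, d: int) -> float:
    """Largest r such that B_p(r) lies in conv(B_1(eps1) U B_inf(epsinf)) in R^d (sketch)."""
    assert epsinf <= eps1 <= d * epsinf, "assumes epsinf <= eps1 <= d * epsinf"
    if p == 1:
        return eps1
    if math.isinf(p):
        return epsinf
    q = dual_exponent(p)
    s = eps1 / epsinf
    k = math.floor(s)
    alpha = s - k  # fractional part of eps1 / epsinf
    # Worst direction: k entries equal to 1, one entry equal to alpha, the rest 0.
    return eps1 / (k + alpha ** q) ** (1.0 / q)


def hull_radius_upper_bound(eps1, epsinf, p, d, trials=2000, seed=0):
    """Random-direction check: every sampled w yields an upper bound on the radius."""
    rng = random.Random(seed)
    return min(
        support_ratio([rng.random() for _ in range(d)], eps1, epsinf, p)
        for _ in range(trials)
    )


print(hull_radius(1.0, 0.1, 2, 784))  # about 0.316
```

With the illustrative values eps1 = 1 and epsinf = 0.1 on 784-dimensional inputs, the L2 radius for the convex hull is about 0.316, whereas the largest L2-ball inside the L1-ball alone has radius 1/28 (about 0.036) and inside the Linf-ball alone radius 0.1.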

Building on the observation that the convex hull contains much larger Lp-balls, we extend the Maximum Margin Regularizer (MMR) of (Croce et al, 2019) to our new MMR-Universal, which yields models that are provably robust according to state-of-the-art certification methods based on Mixed Integer Programming or its LP relaxations.
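
The basic ingredient of MMR-style regularization is a hinge on the Lp-distance of a point x to a hyperplane {z : <w, z> + b = 0}, which equals |<w, x> + b| / ||w||_q with q the dual exponent of p. The sketch below only illustrates how an L1 term and an Linf term could be weighted and combined, in the spirit of the --lmbd_l1, --lmbd_linf, --gamma_l1 and --gamma_linf flags of train.py. It works on a fixed list of hyperplanes and all function names are hypothetical; which boundaries of the network are used in train.py, and how they are selected and averaged, is not reproduced here.

```python
import math
from typing import Sequence, Tuple

# (w, b) describes the hyperplane {z : <w, z> + b = 0}
Hyperplane = Tuple[Sequence[float], float]


def dual_norm(w: Sequence[float], p: float) -> float:
    """||w||_q with 1/p + 1/q = 1 (q = inf for p = 1, q = 1 for p = inf)."""
    if p == 1:
        return max(abs(t) for t in w)
    if math.isinf(p):
        return sum(abs(t) for t in w)
    q = p / (p - 1.0)
    return sum(abs(t) ** q for t in w) ** (1.0 / q)


def lp_distance(x: Sequence[float], plane: Hyperplane, p: float) -> float:
    """Lp-distance from x to the hyperplane: |<w, x> + b| / ||w||_q."""
    w, b = plane
    return abs(sum(wi * xi for wi, xi in zip(w, x)) + b) / dual_norm(w, p)


def mmr_hinge(x, planes, gamma: float, p: float, k: int) -> float:
    """Average of max(0, 1 - d / gamma) over the k hyperplanes closest to x in Lp."""
    dists = sorted(lp_distance(x, pl, p) for pl in planes)[:k]
    return sum(max(0.0, 1.0 - d / gamma) for d in dists) / len(dists)


def mmr_universal_sketch(x, planes, gamma_l1, gamma_linf, lmbd_l1, lmbd_linf, k):
    """Hypothetical weighted sum of an L1 hinge and an Linf hinge."""
    return (lmbd_l1 * mmr_hinge(x, planes, gamma_l1, 1, k)
            + lmbd_linf * mmr_hinge(x, planes, gamma_linf, math.inf, k))
```

The hinge is zero once all k closest hyperplanes are at least gamma away, so the penalty only pushes boundaries that are closer than the chosen margin.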

All models trained with MMR-Universal that are reported in the paper, as well as the datasets required to run the code, can be found in the folders models and datasets available here.

To train a CNN with MMR-Universal:

python train.py --dataset=mnist --p=univ --gamma_l1 1.0 --gamma_linf 0.15 --lmbd_l1 3.0 --lmbd_linf 12.0 --nn_type=cnn_lenet_small --exp_name=cnn_mmr_univ

Note that this is an extension of the MMR implementation, so it is also possible to train MMR models with respect to a single norm with --p 2 or --p inf.

More details about the available parameters can be found in train.py.

eval.py combines multiple methods to compute the empirical and the provable robust error:

python eval.py --n_test_eval=100 --p=inf --dataset=mnist --nn_type=cnn_lenet_small --model_path=/path/to/model.mat

The supported norms are --p inf, --p 2 and --p 1. Note that the evaluation of empirical robustness with respect to the L1-norm is currently not integrated.
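
The empirical robust error is a lower bound and the provable robust error an upper bound on the true robust error. A minimal sketch of how per-point outcomes of several attacks and certification methods could be combined into these two numbers; the PointResult record and the robust_errors function are hypothetical and not the data structures used in eval.py.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class PointResult:
    """Per-test-point outcomes (hypothetical record)."""
    clean_correct: bool
    attacks_succeeded: Sequence[bool] = ()  # one flag per attack; empty if no attack was run
    certified_by: Sequence[bool] = ()       # one flag per certification method


def robust_errors(results: List[PointResult]) -> Tuple[float, float]:
    n = len(results)
    # Empirical robust error (lower bound): misclassified, or some attack found an adversarial example.
    empirical = sum(1 for r in results if not r.clean_correct or any(r.attacks_succeeded))
    # Provable robust error (upper bound): correctly classified points count as robust
    # only if at least one method certifies them.
    provable = sum(1 for r in results if not (r.clean_correct and any(r.certified_by)))
    return empirical / n, provable / n
```

Taking "any" over attacks and over certification methods is what makes combining several methods useful: each additional attack can only raise the lower bound, and each additional verifier can only lower the upper bound.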

All main requirements are collected in the Dockerfile.

The only exceptions are MIPVerify.jl and Gurobi (free academic licenses are available). For these, please follow the installation instructions provided by the authors of MIPVerify.jl. Note, however, that Gurobi with a free academic license cannot be run from a Docker container.

Also note that we use our own forks of the kolter_wong (Wong et al, 2018) and MIPVerify.jl (Tjeng et al, 2018) libraries, and that in attacks.py we redefine the class MadryEtAl of the Cleverhans library to support normalized L2 updates for the PGD attack.
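
For reference, one generic PGD iteration with a normalized L2 update moves a fixed L2 distance along the gradient divided by its L2-norm and then projects back onto the L2-ball of radius eps around the original input. The following sketch uses plain Python lists, a hypothetical pgd_l2_step function and assumes inputs in [0, 1]; it is not the code in attacks.py or in Cleverhans.

```python
import math
from typing import List, Sequence


def l2_norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(t * t for t in v))


def pgd_l2_step(x_adv: Sequence[float], x_orig: Sequence[float], grad: Sequence[float],
                step_size: float, eps: float, lo: float = 0.0, hi: float = 1.0) -> List[float]:
    """One PGD ascent step with an L2-normalized gradient (hypothetical helper)."""
    g_norm = l2_norm(grad)
    # Normalized update: a step of L2 length step_size along the gradient direction.
    if g_norm > 0:
        x_new = [xa + step_size * g / g_norm for xa, g in zip(x_adv, grad)]
    else:
        x_new = list(x_adv)
    # Project the perturbation back onto the L2-ball of radius eps around x_orig.
    delta = [xn - xo for xn, xo in zip(x_new, x_orig)]
    d_norm = l2_norm(delta)
    if d_norm > eps:
        delta = [d * eps / d_norm for d in delta]
    # Clip to the input domain; since x_orig lies in [lo, hi], clipping only shrinks
    # each |delta_i|, so the result stays inside the L2-ball.
    return [min(hi, max(lo, xo + d)) for xo, d in zip(x_orig, delta)]
```

Normalizing the gradient makes the step length independent of the gradient magnitude, which for L2 plays the role that the sign of the gradient plays in Linf-PGD.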

@inproceedings{croce2020provable,
  title={Provable robustness against all adversarial $l_p$-perturbations for $p\geq 1$},
  author={Francesco Croce and Matthias Hein},
  booktitle={International Conference on Learning Representations},
  year={2020},
  url={https://openreview.net/forum?id=rklk_ySYPB}
}